We have entered what the industry is calling the "Agentic Wars." In December 2025, Meta paid over $2 billion to acquire Manus, a nine-month-old startup that had built something Silicon Valley now considers essential: an AI that doesn't just talk, but acts.

ChatGPT and its peers answer questions. Browser agents like Manus, Claude in Chrome, OpenAI's Atlas, and Perplexity's Comet actually do things - they click buttons, fill forms, send emails, book flights, analyse documents and execute multi-step workflows while you do something else.

For time-poor executives, this sounds transformative. In reality, it's not as cool as that. It's still pretty clunky and yesterday Claude in Chrome deleted four sections of my new website - theproposition.co.

So watch out, there are risks. You have been warned. Here we go...

The Browser Agent Market

The browser agent space has consolidated rapidly. Anthropic offers Claude in Chrome (currently in beta for Max subscribers), OpenAI launched ChatGPT Atlas in October 2025, and Perplexity released Comet. Microsoft has integrated Copilot into Windows and Edge, while Google has embedded Gemini into Chrome.

I personally loved Manus when I first saw it, but the more I learned about it, the less comfortable I was using it. The access it can get to your data was one thing. Its unclear data sovereignty was the other.

Meta's Manus acquisition signals where the big money sees the future. Manus hit $100 million in annual recurring revenue within eight months of launch, reportedly the fastest any startup has achieved that milestone. The company processed over 147 trillion tokens and created more than 80 million virtual computers for user tasks before Meta bought it.

The technology works, but I still wonder if it saves time when you look at the time you spend messing around to get the right result.

What Makes This Different

Traditional automation required explicit programming. Browser agents use natural language and visual understanding to navigate interfaces designed for humans. You describe what you want; the agent figures out how to accomplish it.

This generality is both the breakthrough and the vulnerability.

Anthropic's research team puts it plainly: "For AI agents to be genuinely useful, they need to be able to act on your behalf - to browse websites, complete tasks, and work with your context and data. But this comes with risk: every webpage an agent visits is a potential vector for attack."

It's like when you give your PA the keys to your email and personal life - you can never be 100 percent sure it's safe.

The Security Problem Nobody Has Solved

In December 2025, OpenAI published an unusually candid assessment: prompt injection attacks against browser agents "may never be fully solved." The UK's National Cyber Security Centre issued similar warnings. Gartner went further, advising CISOs to block AI browsers entirely for now.

Imagine this scenario - an attacker embeds hidden instructions in a webpage. The instructions might be invisible to humans - white text on a white background, for instance, but the AI processes them as commands.

If the agent follows those instructions instead of the user's, it can forward sensitive emails, make purchases, change settings or exfiltrate data. Suddenly the agent is no longer working for you.

Suddenly the agent is no longer working for you.

Security researchers demonstrated the problem within hours of Atlas launching. A few words hidden in a Google Doc could hijack the browser's behaviour. Brave's security team found they could embed attack instructions in screenshots using faint text that humans couldn't see but AI could read perfectly.

OpenAI's own testing revealed that their reinforcement-learning-trained attacker could "steer an agent into executing sophisticated, long-horizon harmful workflows that unfold over tens (or even hundreds) of steps."

Why This Is Different From Normal Cybersecurity

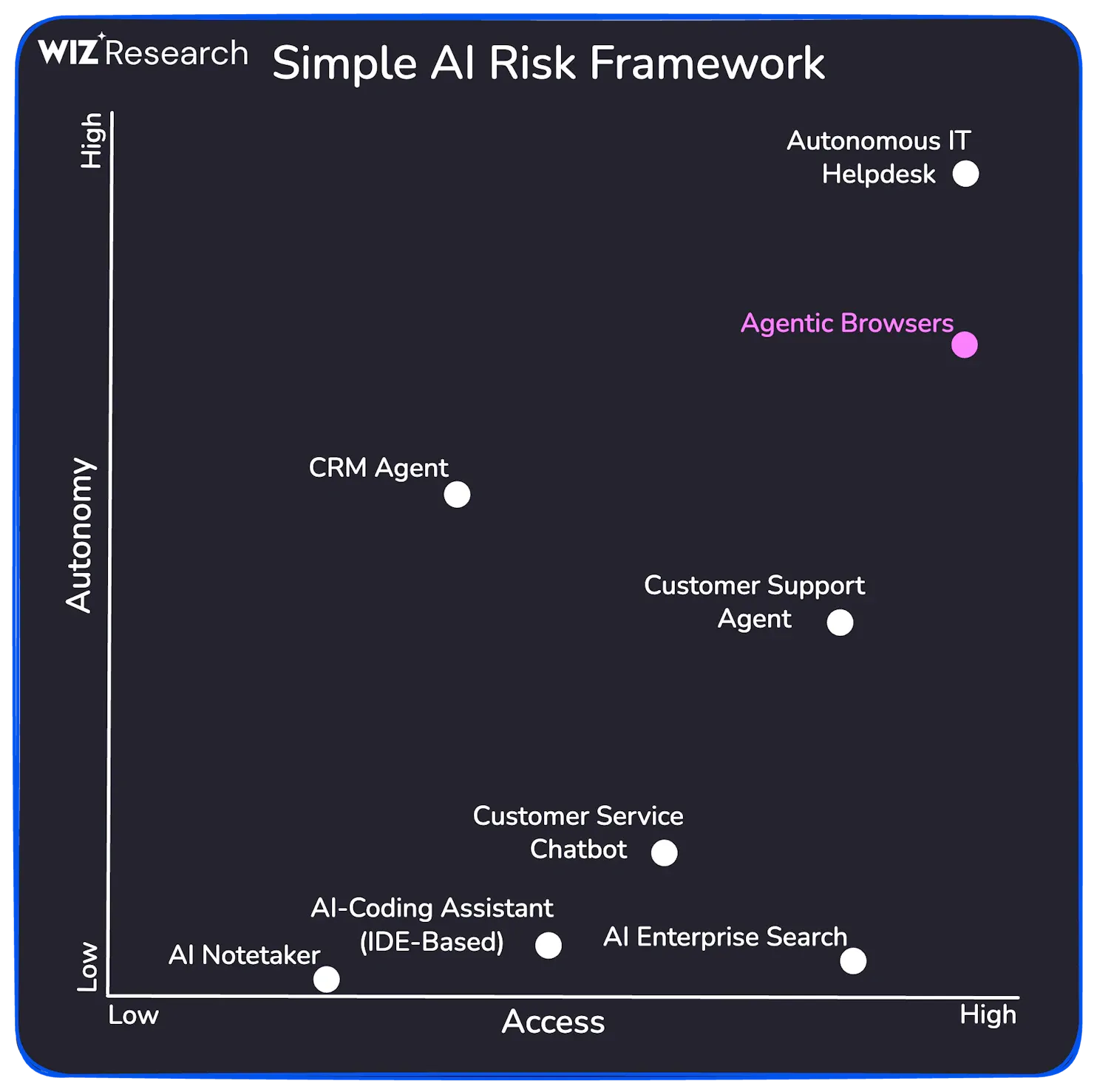

Rami McCarthy, principal security researcher at Wiz, offers a useful framework:

"A useful way to reason about risk in AI systems is autonomy multiplied by access."

Browser agents occupy the most dangerous quadrant. They have high autonomy - they make decisions and take actions independently. They have high access - your logged-in sessions, your email, your files, potentially your banking.

A malicious instruction on any webpage can trigger actions across your bank, email, healthcare portal and corporate systems.

What The Vendors Are Doing

Anthropic claims Claude Opus 4.5 has reduced successful prompt injection attacks to roughly 1% - a significant improvement, but still meaningful risk at scale. They use reinforcement learning to train Claude to recognise and refuse malicious instructions, even when designed to appear authoritative or urgent.

OpenAI has built an "LLM-based automated attacker" - essentially an AI trained to find vulnerabilities before external hackers do. They've implemented "logged out mode" (the agent can't access your authenticated sessions) and "watch mode" (requiring explicit confirmation for sensitive actions).

All vendors recommend the same mitigation: give agents specific instructions rather than broad mandates. "Take whatever action is needed" invites exploitation.

Practical Implications

For organisations considering deployment, Wiz suggests three rules:

Isolate the context. Use dedicated, separate browser profiles that don't share credentials with your primary work email or banking.

Preserve the human. Never disable confirmation requirements when working with a privileged agent session. The inconvenience is the protection.

Limit the blast radius. Restrict agent use to low-stakes tasks - research, public data gathering - where a compromised action carries lower cost.

The Deeper Question

While it's exciting times out there, it might be better to sandbox this one or wait to see what happens for a while.

Browser agents represent a fundamental shift in how humans interact with computers. We are delegating authority to systems that weren't designed with strong isolation or clear permission models.

We are not yet at the point where you can tell an AI to manage your inbox and walk away.

The question is whether the partial automation - the agent that handles 80% of the task while you supervise the other 20% - delivers enough value to justify the vigilance it requires.

For me, not yet.

If you have any questions, I run an Open Office slot at Monday 2pm UK every week if you'd like to come and ask me anything.

Video call link: https://meet.google.com/ojx-tjwh-bop